The concept of global catastrophic risk is customarily defined in human terms. Details vary, but a global catastrophe is almost always regarded as something bad that happens to humans. However, moral philosophy often holds that things that happen to nonhumans can also be bad, and likewise that they can be good. In some circumstances, whether and how nonhumans are valued may make the difference between extremely good and catastrophically bad outcomes for nonhumans. This raises the question of how nonhumans may be valued. To help address that question, this paper presents a survey of ideas about how to value nonhumans.

This paper grew out of GCRI’s recent work on the ethics of artificial intelligence [1], which argues that AI ethics should include the moral value of nonhumans. The design of AI systems, especially advanced future AI systems, is one setting in which the valuation of nonhumans can be extremely important. Our prior work on AI ethics developed this point but did not elaborate in detail on the options for valuing nonhumans. The literature on the moral value of nonhumans is widely scattered and difficult to follow. This paper therefore pulls that literature together in a more accessible form and provides a resource for ongoing efforts to study global catastrophic risks, AI ethics, and related topics from perspectives that are not strictly human-centered.

The paper focuses specifically on the intrinsic value of nonhumans. To be intrinsically valued means to have inherent value, or to be valuable for its own sake. For example, one might seek to prevent a species from going extinct on the grounds that its extinction would be inherently bad. In contrast, one might seek to prevent the species’ extinction because the species is of some value to humans (or to something else), such as when humans use it for food or medicine. The idea that species extinctions are inherently bad is one type of idea about the intrinsic value of nonhumans; the paper surveys the full range of such ideas.

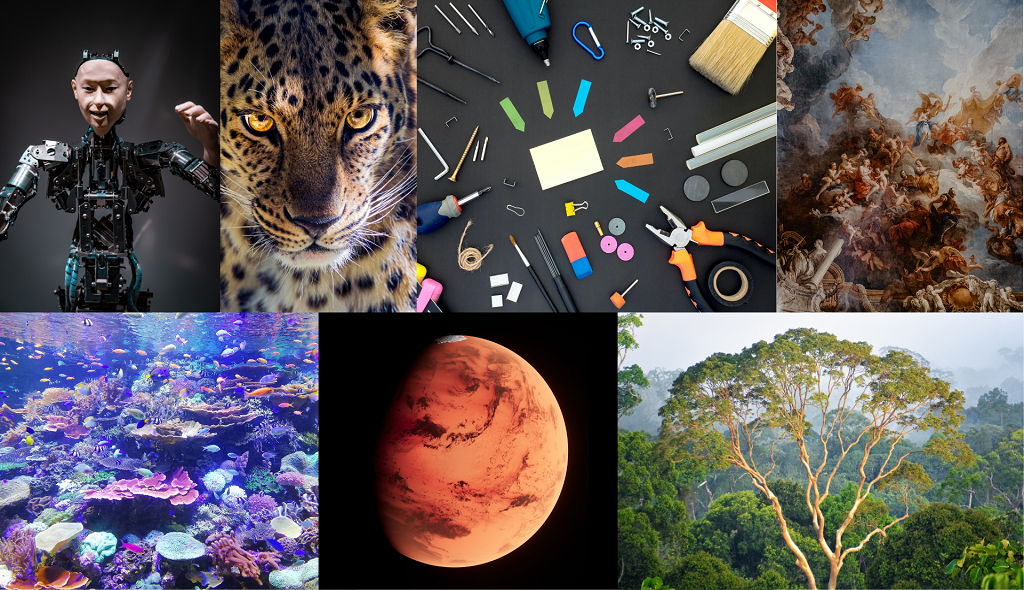

The paper organizes these ideas along two dimensions. First, it distinguishes between “natural” and “artificial” nonhuman entities. “Natural” entities are things such as ecosystems, nonhuman animals, and biological life. “Artificial” entities are things created by humans, such as art and technology. Second, the paper distinguishes between “subject-based” and “object-based” moral theories. In “subject-based” theories, moral judgments are made based on some aggregate of the views of a population of moral subjects. An example is democracy, which aggregates the views of voters. In “object-based” theories, moral judgments are made based on what is good or bad for some type of moral object. Examples include versions of utilitarianism that seek to maximize things like pleasure or happiness; in this case, pleasure or happiness are the moral objects.

A variety of moral theories can be described in terms of these two dimensions. Some are well established in the moral philosophy literature, such as versions of utilitarianism that intrinsically value the pleasure or happiness of nonhuman animals, and theories from environmental ethics that intrinsically value ecosystems or biological life. Other theories have received less attention, such as those on the intrinsic value of artificial ecosystems or artificial life. One surprising finding was that the concepts of diversity and aesthetic quality have received very little prior attention in intrinsic value research. Overall, those seeking to intrinsically value nonhumans have a multitude of options available and considerable philosophical resources to draw upon. As this paper demonstrates, the stakes are high: moral valuations of nonhumans can dominate extremely important issues, from factory farming, deforestation, and global warming to the creation of artificial life, civilizational expansion into outer space, and the goal-optimization of advanced AI systems.

[1] Prior GCRI research on AI ethics includes the papers “Moral consideration of nonhumans in the ethics of artificial intelligence,” “The ethics of sustainability for artificial intelligence,” “Social choice ethics in artificial intelligence,” “From AI for people to AI for the world and the universe,” and “Artificial intelligence needs environmental ethics.” A related paper is “Greening the universe: The case for ecocentric space expansion.”

Academic citation:

Owe, Andrea, Seth D. Baum, & Mark Coeckelbergh, 2022. Nonhuman value: A survey of the intrinsic valuation of natural and artificial nonhuman entities. Science and Engineering Ethics, vol 28, no. 5, article 38. DOI 10.1007/s11948-022-00388-z.

Image credits:

Assorted tools: Dan Cristian Pădureț

Classical art: Adrianna Geo

Robot: Maximalfocus

Coral reef: SGR

Leopard: Dustin Humes

Rainforest: Jeremy Bezanger

Mars: Planet Volumes