View the paper “A Survey of Artificial General Intelligence Projects for Ethics, Risk, and Policy”

Artificial general intelligence (AGI) is AI that can reason across a wide range of domains. While most AI research and development (R&D) is on narrow AI, not AGI, there is some dedicated AGI R&D. If AGI is built, its impacts could be profound. Depending on how it is designed and used, it could either help solve the world’s problems or cause catastrophe, possibly even human extinction.

This paper presents the first-ever survey of active AGI R&D projects for ethics, risk, and policy. The survey attempts to identify every active AGI R&D project and characterize them in terms of seven attributes:

- The type of institution the project is based in

- Whether the project publishes open-source code

- Whether the project has military connections

- The nation(s) that the project is based in

- The project’s goals for its AGI

- The extent of the project’s engagement with AGI safety issues

- The overall size of the project

To accomplish this, the survey uses openly published information as found in scholarly publications, project websites, popular media articles, and other websites, including 11 technical survey papers, 8 years of the Journal of Artificial General Intelligence, 7 years of AGI conference proceedings, 2 online lists of AGI projects, keyword searches in Google web search and Google Scholar, the author’s prior knowledge, and additional literature and webpages identified via all of the above.

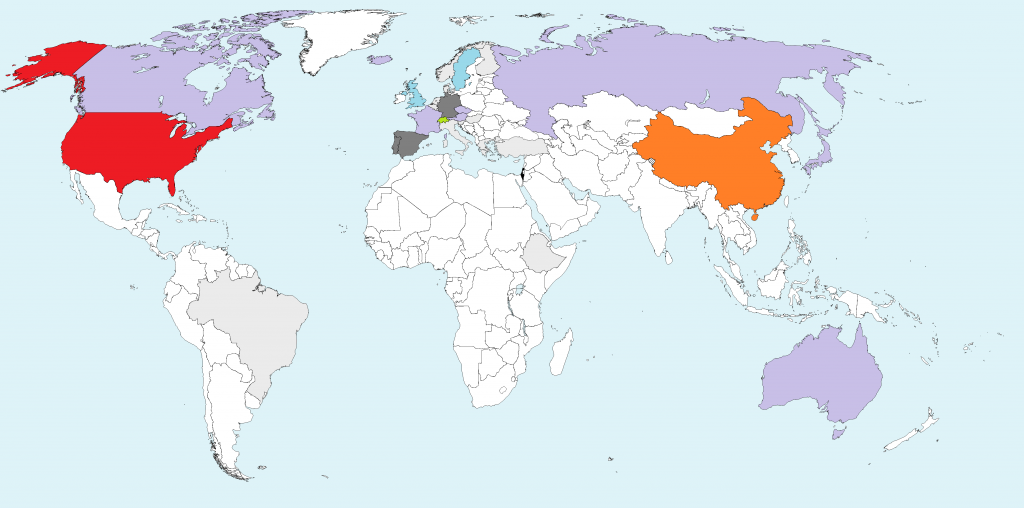

The survey identifies 45 AGI R&D projects spread across 30 countries on 6 continents. Many of them are based in major corporations and academic institutions, and some are large and heavily funded. Many of the projects are interconnected via common personnel, common parent organizations, or project collaboration.

For each of the seven attributes, some major trends about AGI R&D projects are apparent:

- Most projects are in corporations or academic institutions.

- Most projects publish open-source code.

- Few projects have military connections.

- Most projects are based in the US, and almost all are in either the US or a US ally. The only projects that exist entirely outside the US and its allies are in China or Russia, and these projects all have strong academic and/or Western ties.

- Most projects state goals oriented towards the benefit of humanity as a whole or towards advancing the frontiers of knowledge, which the paper refers to as “humanitarian” and “intellectualist” goals.

- Most projects are not active on AGI safety issues.

- Most projects are in the small-to-medium size range. The three largest projects are DeepMind (a London-based project of Google), the Human Brain Project (an academic project based in Lausanne, Switzerland), and OpenAI (a nonprofit based in San Francisco).

Looking across multiple attributes, some additional trends are apparent:

- There is a cluster of academic projects that state goals of advancing knowledge (i.e., intellectualist) and are not active on safety.

- There is a cluster of corporate projects that state goals of benefiting humanity (i.e., humanitarian) and are active on safety.

- Most of the projects with military connections are US academic groups that receive military funding, including a sub-cluster within the cluster of academic, intellectualist projects that are not active on safety.

- All six China-based projects are small, though some are at large organizations with the resources to scale quickly.

The data suggest the following conclusions:

Regarding ethics, the major trend is projects’ split between stated goals of benefiting humanity and advancing knowledge, with the former coming largely from corporate projects and the latter from academic projects. While these are not the only goals that projects articulate, there appears to be a loose consensus for some combination of these goals.

Regarding risk, in particular the risk of AGI catastrophe, there is good news and bad news. The bad news is that most projects are not actively addressing AGI safety issues. Academic projects are especially inactive on safety. Another area of concern is the potential for corporate projects to put profit ahead of safety and the public interest. The good news is that there is a lot of potential to get projects to cooperate on safety issues, thanks to the partial consensus on goals, the concentration of projects in the US and its allies, and the various interconnections between different projects.

Regarding policy, several conclusions can be made. First, the concentration of projects in the US and its allies could greatly facilitate the establishment of public policy for AGI. Second, the large number of academic projects suggests an important role for research policy, such as review boards to evaluate risky research. Third, the large number of corporate projects suggests a need for attention to the political economy of AGI R&D. For example, if AGI R&D brings companies near-term profits, then policy could be much more difficult. Finally, the large number of projects with open-source code presents another policy challenge by enabling AGI R&D to be done by anyone anywhere in the world.

This study has some limitations, meaning that the actual state of AGI R&D may differ from what is presented here. The main limitation is that the survey is based exclusively on openly published information. Some AGI R&D projects may have been missed, so the number of projects identified in this survey, 45, is a lower bound. Furthermore, projects' actual attributes may differ from those found in openly published information. For example, most corporate projects did not state the goal of profit, even though many presumably seek profit. Therefore, this study's results should not be assumed to necessarily reflect the actual current state of AGI R&D. That said, the study nonetheless provides the most thorough description yet of AGI R&D in terms of ethics, risk, and policy.

Academic citation

Baum, Seth D., 2017. A survey of artificial general intelligence projects for ethics, risk, and policy. Global Catastrophic Risk Institute Working Paper 17-1.

This blog post was published on 28 July 2020 as part of a website overhaul and backdated to reflect the time of the publication of the work referenced here.